If a computer were human, then its central processing unit (CPU) would be its brain. A CPU is a microprocessor -- a computing engine on a chip. While modern microprocessors are small, they're also really powerful. They can interpret millions of instructions per second. Even so, there are some computational problems that are so complex that a powerful microprocessor would require years to solve them.

Computer scientists use different approaches to address this problem. One potential approach is to push for more powerful microprocessors. Usually this means finding ways to fit more transistors on a microprocessor chip. Computer engineers are already building microprocessors with transistors that are only a few dozen nanometers wide. How small is a nanometer? It's one-billionth of a meter. A red blood cell has a diameter of roughly 7,000 nanometers -- the width of modern transistors is a tiny fraction of that size.

Building more powerful microprocessors requires an intense and expensive production process. Some computational problems take years to solve even with the benefit of a more powerful microprocessor. Partly because of these factors, computer scientists sometimes use a different approach: parallel processing.

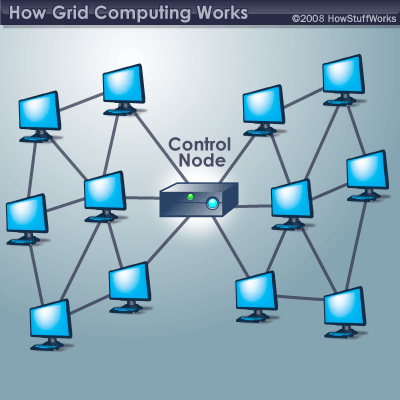

In general, parallel processing means that at least two microprocessors handle parts of an overall task. The concept is pretty simple: A computer scientist divides a complex problem into component parts using software designed for the task. He or she then assigns each component part to a dedicated processor. Each processor solves its part of the overall computational problem. The software then reassembles the partial results to reach the solution to the original complex problem.
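The divide, assign, solve and reassemble steps can be sketched in a few lines of Python. This is a minimal illustration, not how any particular parallel system is built: the task (summing squares) and the names `split_into_chunks`, `sum_of_squares` and `parallel_sum_of_squares` are made up for the example, and it assumes the standard library's `concurrent.futures` module. Note that the operating system, not the program, decides which physical processor runs each worker, so "dedicated processor" is only approximated here.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def split_into_chunks(items, parts):
    """Divide the problem: split items into `parts` nearly equal slices."""
    items = list(items)
    size, extra = divmod(len(items), parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks take one additional item each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """Solve one component part; runs on whichever worker receives it."""
    return sum(n * n for n in chunk)


def parallel_sum_of_squares(numbers, workers=4, executor_cls=ProcessPoolExecutor):
    """Hypothetical helper: divide, farm out, then reassemble the answer.

    executor_cls is an assumption of this sketch; passing ThreadPoolExecutor
    instead keeps the same structure without starting extra processes.
    """
    chunks = split_into_chunks(numbers, workers)
    with executor_cls(max_workers=workers) as pool:
        # Each chunk is handed to a separate worker.
        partials = list(pool.map(sum_of_squares, chunks))
    # Reassemble: combine the partial results into the final answer.
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(range(1_000)))
```

For a job this small, the cost of starting workers and shipping data to them outweighs any gain; the approach pays off only when each part involves a lot of computation.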

It's a high-tech way of saying that it's easier to get work done if you can share the load. You could divide the load up among different processors housed in the same computer, or you could network several computers together and divide the load up among all of them. There are several ways to achieve the same goal.

What are the different approaches to parallel processing? Find out in the next section.
